Welcome @guylerdiscourse! Please add more information on your Jarvis version, the related notes model you're using, and try this debugging guide.

Hi @shikuz

I've just installed this and it seems pretty damn awesome. The ability to chat inside Joplin is quite awesome.

I'm doing my chats exclusively with Gemini models. Minor question - is there a way to configure Gemini 2.5 models?

More of an issue though seems to be that I am regularly not getting any responses and I see no easy way to debug this.

I am only using "Chat with Jarvis". I have a fairly large system prompt that I'm using (~400 lines).

Queries do work with a new note but after a couple of messages all I get is:

Generating response...

At this point I can't get any more messages in that note, I have to start a new one. Any idea what I'm doing wrong?

This plugin is very cool. I've got it set up with qwen3. Problem: I can't seem to get it to use the commands. I would like to use "Search:" OR "Notes:", but when I include them, Jarvis doesn't take them into account.

Do I need to do something to enable these commands?

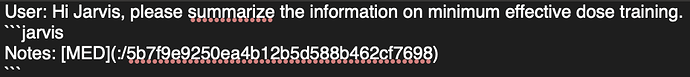

User: Hi Jarvis, please summarize the information on minimum effective dose training.

Notes: [MED](:/5b7f9e9250ea4b12b5d588b462cf7698)

It should look for that note, which is a summary of minimum effective dose training in a strength-barbell context, but it only searches its own pre-baked understanding of what minimum effective dose training might be.

What's going on? Could it be conflicting with another plugin?

Update: I tried it with all the plugins turned off and it still isn't looking at the note. I'm using ollama and qwen3.

Thank you @ianchanning!

For Gemini models, unlike OpenAI / Anthropic, I need to release a new version of the plugin, and I plan to soon (models are released so frequently that it's hard to keep up).

As for errors, the plugin usually gives an error dialog for every error the model sends, but I think that in the case of Gemini it's not handled properly. I'll fix that, thanks for reporting.

Thanks @karl1!

Please try to write your prompt the following way (as shown here, in a code block):

In addition, please ensure that you use the command Chat with your notes (Alt + Ctrl/Cmd + C), which displays the text "Generating notes response..." while the model is working.

v0.10.1

- fixed: Gemini prompts always begin with user role (system message)

- improved: updated OpenAI models: gpt-4.1-nano, gpt-4.1-mini, gpt-4.1, o4-mini, o3

- improved: updated Claude models: claude-sonnet-4-0, claude-opus-4-0

- improved: updated Gemini models: gemini-2.5-flash, gemini-2.5-pro

- improved: error handling in Gemini

- improved: Ollama embeddings
  - support API key (#45)
  - support native, openai and legacy APIs

- improved: scroll cursor to line

- new: setting: Notes: Scroll delay (ms)

v0.10.2

- new: setting: Annotate: Keep existing tags

It is still not acting as expected.

User: Hi Jarvis, please summarize the information on minimum effective dose training.

```jarvis
Notes: [MED](:/5b7f9e9250ea4b12b5d588b462cf7698)
```

Jarvis:

Okay, let's tackle this. The user wants a summary of their thoughts about poetry based on the notes. Let me go through each note to find relevant info.

Note 1: Karl mentions ...[lots of unrelated notes. No engagement with the actual note in the code block]

User:

Jarvis uses embeddings to search the content of your notes, and based on the context and prompt that you provide it looks for the most similar text chunks. You can see exactly the content that is sent to the model by placing your cursor after the prompt and running the command Preview chat notes context. I expect to see there (in purple) relevant chunks from the note you provided. If they are not relevant, or not lengthy enough, you can do a few things, depending on the capabilities of the models that you have access to:

- Send more excerpts to the model.
  - Increase Chat: Memory tokens in the settings in Jarvis: Chat. You need a model with a large context window for that.

- Switch to a better embedding model than the default one.

- Send a larger fraction (perhaps even all) of the content of each note it finds (or is given).
  - Increase the 2 settings Notes: Number of leading / trailing blocks to add in Jarvis: Related notes.

- Chunk your notes into smaller blocks (more specific representation), or larger ones to return longer consecutive blocks each time.
  - Decrease or increase Notes: Max tokens in Jarvis: Related notes. Note that this will require processing all your note database again!

- If all else fails (although I don't think it should, if all settings are correct), use "Ask Jarvis" and copy paste the content of your specific single note into the prompt window, or chat with Jarvis at the bottom of the notes you would like to summarise.
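
For intuition, here is a minimal Python sketch of this kind of embedding-based retrieval. It is not the plugin's actual code: the toy two-dimensional vectors, the function names `cosine` and `retrieve`, and the `top_k`, `leading` and `trailing` parameters are assumptions made up for illustration. It only shows the general idea of ranking chunks by similarity to the prompt and optionally pulling in neighbouring blocks around each hit.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, chunks, top_k=1, leading=0, trailing=0):
    """Return the texts of the top_k most similar chunks, in note order.

    chunks is a list of (text, vector) pairs in the order they appear
    in the note. leading / trailing add that many neighbouring chunks
    before / after each hit (hypothetical stand-ins for the
    "leading / trailing blocks" settings).
    """
    ranked = sorted(
        range(len(chunks)),
        key=lambda i: cosine(query_vec, chunks[i][1]),
        reverse=True,
    )
    keep = set()
    for i in ranked[:top_k]:
        first = max(0, i - leading)
        last = min(len(chunks) - 1, i + trailing)
        keep.update(range(first, last + 1))
    return [chunks[i][0] for i in sorted(keep)]


# Toy data: hand-picked vectors standing in for real embeddings.
chunks = [
    ("Intro to the program", [0.1, 0.9]),
    ("Minimum effective dose: one heavy set", [0.9, 0.1]),
    ("Recovery notes", [0.6, 0.4]),
]
query = [1.0, 0.0]

best_only = retrieve(query, chunks)
with_neighbours = retrieve(query, chunks, leading=1, trailing=1)
```

With these toy vectors, `best_only` contains just the "Minimum effective dose" chunk, while `with_neighbours` also includes the blocks before and after it. That is the trade-off behind the settings above: more surrounding text gives the model more context, but uses more of its context window.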

I'd love to see examples of the use of the code block. I'm still not seeing any change in the context of my notes from using it. This is a very neat plugin!

Moving to llama3.1 from qwen appears to have solved it. I'm enjoying it very much!

Thanks for this very cool plugin. I recently installed it, added my openAI key, selected GPT-4o as the model, and otherwise left the settings as default. Something is obviously wrong as even basic questions return no answers. See example exchange below (in my actual prompt I included the client name). What might be wrong here?

My prompt:

Please find me all of my meeting notes related to meetings with the \client name\ and summarize them. Don't include information from notes that aren't directly about \this client \ .

Jarvis: I don’t have access to your personal files or meeting notes unless you provide them here. If you paste your meeting notes or upload the relevant text, I can review and summarize all information directly related to meetings with \client \ . Please share the notes you’d like me to analyze!

User:

Thanks @myrison! Important to note: [image]

See this post as well for tips, and the Jarvis guide. Note that you could also define search queries for notes using Jarvis blocks, as described in the guide, perhaps it will help focusing on specific customer-related notes.

Hello, I can just imagine how great this plugin could be. Sadly I am stumbling on the first steps. I followed the Guide to run a local LM Studio Server model (joplin-plugin-jarvis/GUIDE.md at master · alondmnt/joplin-plugin-jarvis · GitHub).

I get the Error:

Incorrect API key provided: openai_a**_key. You can find...

It seems to me that it somehow matters what API key is set in the options, while it should not if I understood correctly. I just want to run it locally.

Any ideas how to solve or if it is a bug?

Edit: tried with qwen3-8b and google/gemma-3-12b in LM Studio server

Edit 2: I had to manually roll back to v0.10.0, which worked fine. The problem seems to be connected to "Custom model endpoint and model ID settings ignored for chat completions (Ollama integration broken)" · Issue #47 · alondmnt/joplin-plugin-jarvis · GitHub

Kind regards

I'm using Note_LLM and it works exactly like I expect it to work. I wrote a post about Joplin and used the tool to clean up my writing a bit.

Welcome @LLMBoyec! Thanks for reporting.

If there's a bug that was introduced after v0.10.0, I'd really like to find it.

The setup that I tested below appears to work (for me), so I'm unable to reproduce the issue.

That said, I did find that error messages from LM Studio could be handled better in the plugin, so thank you for that.

I currently have:

- LM Studio v0.3.16 (running gemma-3-1b, but I don't think it matters)

- Jarvis v0.10.2, with the following settings:

  - Model: OpenAI API Key: Anything
  - Chat: Model: OpenAI-compatible custom model
  - Chat: OpenAI (or compatible) custom model ID: google/gemma-3-1b
  - Chat: Custom model is a conversation model: Enabled
  - Chat: Custom model API endpoint: http://127.0.0.1:1234/v1/chat/completions
  - Notes: Semantic similarity model: Universal Sentence Encoder, for the purpose of this test
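
To rule out the server itself, it can help to send the same kind of request from outside Joplin. Below is a minimal Python sketch that does this with only the standard library. The endpoint, model ID and placeholder key are taken from the settings above; the helper names `build_request` and `ask` are made up for this example, and the request and response shapes assume the server follows the usual OpenAI-compatible chat completions format.

```python
import json
import urllib.request

# Values copied from the settings listed above.
ENDPOINT = "http://127.0.0.1:1234/v1/chat/completions"
MODEL_ID = "google/gemma-3-1b"
API_KEY = "Anything"  # placeholder; a local server is not expected to check it


def build_request(prompt, endpoint=ENDPOINT, model=MODEL_ID, api_key=API_KEY):
    """Build (but do not send) a chat completions POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt, timeout=60):
    """Send the prompt to the local server and return the reply text.

    Assumes an OpenAI-style response body with a "choices" list.
    """
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the LM Studio server running, calling `ask("Say hello in one short sentence.")` should return the model's reply. If that works but the plugin still reports an API key error, the request is probably not reaching the custom endpoint, which would point at the plugin settings rather than the server.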